Multi-Cloud Data Engineer: The Future of Data Engineering

Introduction

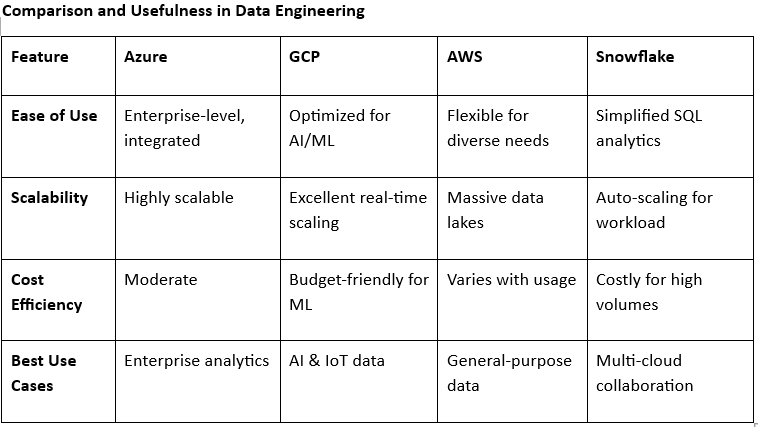

As data volumes keep growing, organizations need flexible, scalable, and reliable solutions, and that demand is driving the rise of multi-cloud data engineering. This approach combines multiple cloud platforms (Azure, GCP, and AWS) with Snowflake, a data platform that runs on all three, to build, manage, and optimize data pipelines. In this blog, we’ll explore the role each platform plays, how they complement each other, and why multi-cloud expertise is becoming indispensable in the data industry.

1. What is Multi-Cloud Data Engineering?

Definition:

Multi-cloud data engineering involves the integration of data processing and storage across multiple cloud platforms to take advantage of each platform's unique capabilities.

Why it Matters:

- Reduces vendor lock-in.

- Increases flexibility in choosing the best tools for specific workloads.

- Improves availability and disaster recovery by distributing workloads across providers.

The diagram above illustrates multi-cloud data engineering and its components. It shows how different platforms (Azure, AWS, GCP, and Snowflake) work together to handle various data engineering tasks. Its main elements are described below.

Central Theme

- "Multi-Cloud Data Engineering" is highlighted in the center, emphasizing its importance as a unified approach to data management.

- This is the hub around which the entire ecosystem revolves, connecting the strengths of individual cloud platforms.

Cloud Platforms

Each cloud platform is depicted as a distinct cloud icon with its key services listed below it:

- Azure (Blue Cloud):

  - Services such as Azure Data Factory, Azure Synapse Analytics, and Azure Databricks represent Azure's strengths in ETL, data warehousing, and AI/ML integration.

  - Focus: Seamless integration with enterprise systems and analytics.

- AWS (Orange Cloud):

  - Tools such as AWS Glue, Amazon S3, and Amazon Redshift represent its ETL, storage, and analytics capabilities.

  - Focus: A broad toolset and scalability for diverse workloads.

- GCP (Green Cloud):

  - BigQuery, Dataflow, and Dataproc highlight its analytics-first approach and real-time data processing capabilities.

  - Focus: Efficient, AI-driven analytics and cost-effectiveness.

- Snowflake (Purple Cloud):

  - Snowflake's data warehouse represents its multi-cloud compatibility and high-performance data storage and analysis.

  - Focus: A unified data platform for cross-cloud operations.

Connectivity and Data Flow

- Arrows between the platforms depict data flow, collaboration, and integration across these systems.

- Example: Raw data might land in Amazon S3 (storage), be analyzed in BigQuery (analytics), and then be loaded into Snowflake (warehousing) for centralized reporting.
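To make the example flow concrete, here is a minimal in-memory sketch of its three stages. The functions `land_in_object_storage`, `aggregate_for_analytics`, and `load_into_warehouse` are hypothetical stand-ins: a real pipeline would call the Amazon S3, BigQuery, and Snowflake client libraries at each step, which this sketch deliberately does not do.

```python
from collections import defaultdict

def land_in_object_storage(events):
    """Stage 1 (stands in for Amazon S3): keep raw events unchanged, keyed by an object path."""
    return {f"raw/events/part-{i:04d}.json": event for i, event in enumerate(events)}

def aggregate_for_analytics(objects):
    """Stage 2 (stands in for BigQuery): total revenue per product across all raw objects."""
    totals = defaultdict(float)
    for event in objects.values():
        totals[event["product"]] += event["amount"]
    return dict(totals)

def load_into_warehouse(warehouse, table, rows):
    """Stage 3 (stands in for Snowflake): append aggregated rows to a named table."""
    warehouse.setdefault(table, []).extend(
        {"product": product, "revenue": revenue} for product, revenue in sorted(rows.items())
    )
    return warehouse

# Hypothetical raw sales events
raw_events = [
    {"product": "shoes", "amount": 40.0},
    {"product": "hats", "amount": 15.0},
    {"product": "shoes", "amount": 60.0},
]

warehouse = {}
objects = land_in_object_storage(raw_events)
totals = aggregate_for_analytics(objects)
load_into_warehouse(warehouse, "daily_revenue", totals)
print(warehouse["daily_revenue"])
```

Each stage only consumes the previous stage's output, which is what lets the stages live on different clouds.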

Background Theme

- The digital pipeline and node-like structure in the background emphasize:

  - The connectivity between these platforms.

  - How data pipelines work in a distributed, multi-cloud architecture.

Key Takeaways:

- Azure is ideal for businesses using Microsoft tools and requiring enterprise-ready analytics.

- AWS offers the broadest range of services for flexibility and scalability.

- GCP specializes in analytics-heavy applications and cost efficiency.

- Snowflake serves as the glue for multi-cloud data warehousing.

This diagram visually conveys the synergy and complementary strengths of a multi-cloud strategy, demonstrating its critical role in modern data engineering workflows.

2. ☁ Cloud Data Engineering: Platforms and Roles

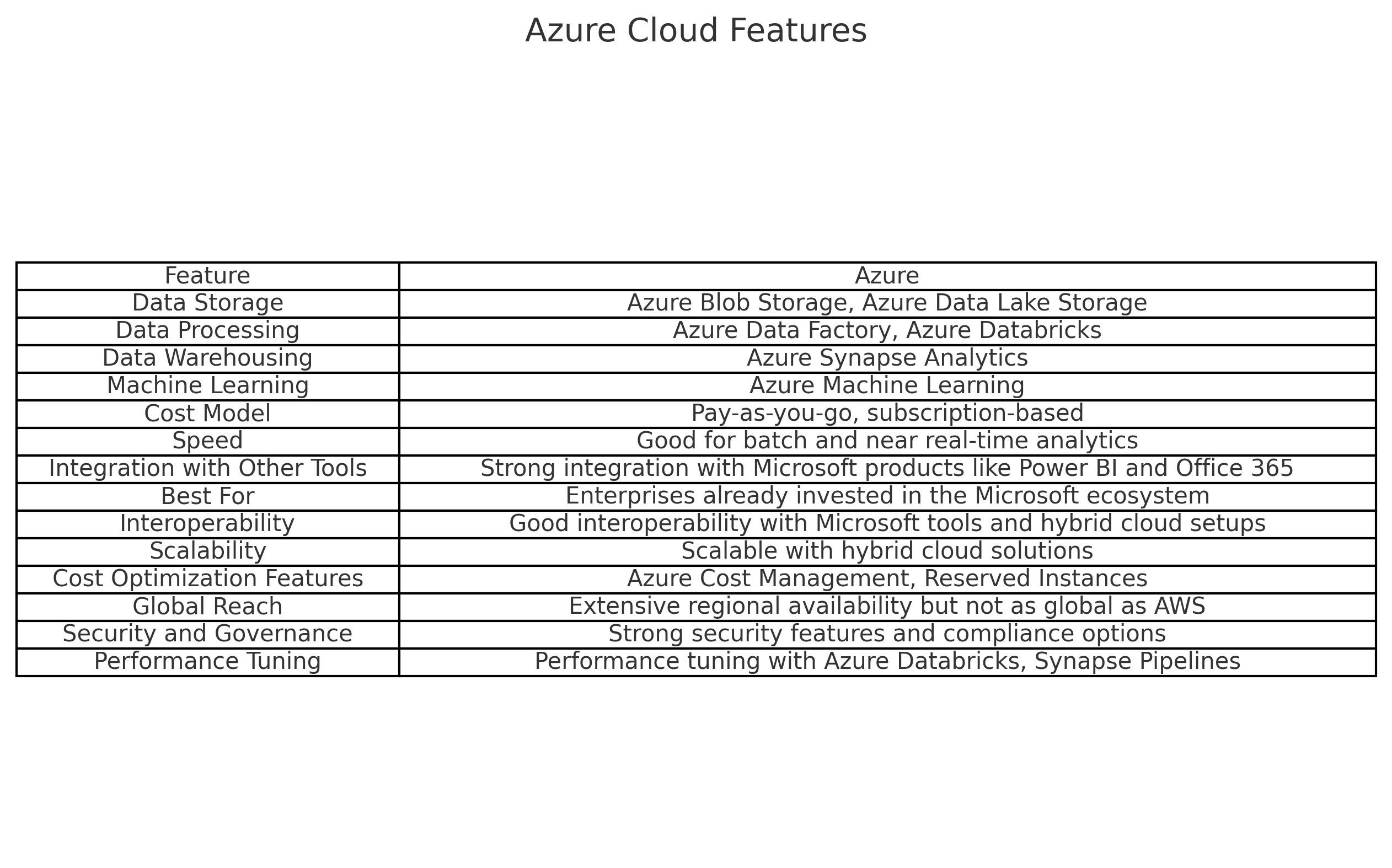

🔵 Azure Data Engineer

What is it?

An Azure Data Engineer builds, manages, and optimizes data pipelines and systems on Microsoft Azure, using Azure's ecosystem for data storage, integration, processing, and analytics.

Key Tools & Services:

- Azure Data Factory (ETL pipelines)

- Azure Databricks (Big Data & Machine Learning)

- Azure Synapse Analytics (Data Warehousing & Analytics)

- Azure Data Lake Storage (Data Storage for analytics)

- Azure Stream Analytics (Real-time data processing)

Usefulness in Data Engineering:

- Real-time sales data analysis.

- Predictive analytics using Azure ML.

- Data transformation and integration for reporting.

- Build scalable pipelines for big data.

- Integrate real-time and batch data for analytics.

- Facilitate AI/ML workloads using integrated Azure ML tools.
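Real-time sales analysis in a service such as Azure Stream Analytics typically groups events into fixed, non-overlapping time windows (its query language calls these tumbling windows). The sketch below reproduces that idea in plain Python on a hypothetical list of `(timestamp_seconds, amount)` sales events. It illustrates the windowing logic only and is not the Stream Analytics API.

```python
def tumbling_window_totals(events, window_seconds):
    """Sum sale amounts per fixed, non-overlapping window.

    Each event is (timestamp_seconds, amount). An event belongs to the window
    that starts at the largest multiple of window_seconds <= its timestamp.
    """
    totals = {}
    for timestamp, amount in events:
        window_start = (timestamp // window_seconds) * window_seconds
        totals[window_start] = totals.get(window_start, 0.0) + amount
    # Return windows in chronological order.
    return dict(sorted(totals.items()))

# Hypothetical sales events: (seconds since start of day, sale amount)
sales = [(5, 20.0), (42, 10.0), (61, 5.0), (119, 7.5), (180, 12.0)]

for start, total in tumbling_window_totals(sales, window_seconds=60).items():
    print(f"window starting at {start:>3}s: ${total:.2f}")
```

Windows with no events (here, 120 to 179 seconds) simply produce no output row.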

Cost:

- Pay-as-you-go model; for example, Azure Data Factory orchestration starts at about $1 per 1,000 activity runs.

- Costs depend on the services used, chiefly storage, compute, and data transfer.

Example:

- Azure Data Factory data movement (copy activity): about $0.25 per DIU-hour (Data Integration Unit hour).

- Azure Synapse Analytics: serverless SQL pools are billed at about $5 per TB of data processed, while dedicated SQL pools are billed hourly according to the provisioned performance level.
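The two Data Factory rates combine into a quick monthly estimate. This sketch assumes the article's approximate prices ($1 per 1,000 activity runs for orchestration and $0.25 per hour of data movement, which Azure meters in DIU-hours) and ignores other Data Factory charges such as activity execution time and data flows, so treat it as back-of-the-envelope arithmetic rather than a pricing calculator.

```python
def estimate_adf_monthly_cost(activity_runs, diu_hours,
                              price_per_1000_runs=1.00, price_per_diu_hour=0.25):
    """Rough Azure Data Factory estimate: orchestration plus data movement only.

    Default prices are the approximate figures quoted in this article; pass
    current regional prices to get a realistic number.
    """
    orchestration = activity_runs / 1000 * price_per_1000_runs
    data_movement = diu_hours * price_per_diu_hour
    return round(orchestration + data_movement, 2)

# Hypothetical workload: 24 pipeline runs per day, 5 activities each, for 30 days,
# with each run's copy activity using 4 DIUs for 15 minutes.
runs = 24 * 5 * 30                 # 3,600 activity runs
diu_hours = 24 * 30 * 4 * 0.25     # 720 DIU-hours
print(estimate_adf_monthly_cost(runs, diu_hours))
```

In this hypothetical workload, data movement dominates the bill, which is typical when copy activities run frequently.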

Future Outlook:

- Strong focus on enterprise-level solutions and AI integration.

Strengths:

- Enterprise-friendly tools for data processing and AI.

- Strong integration with Microsoft products like Power BI and Dynamics.

Best Fit:

- Enterprises seeking seamless integration with existing Microsoft ecosystems.

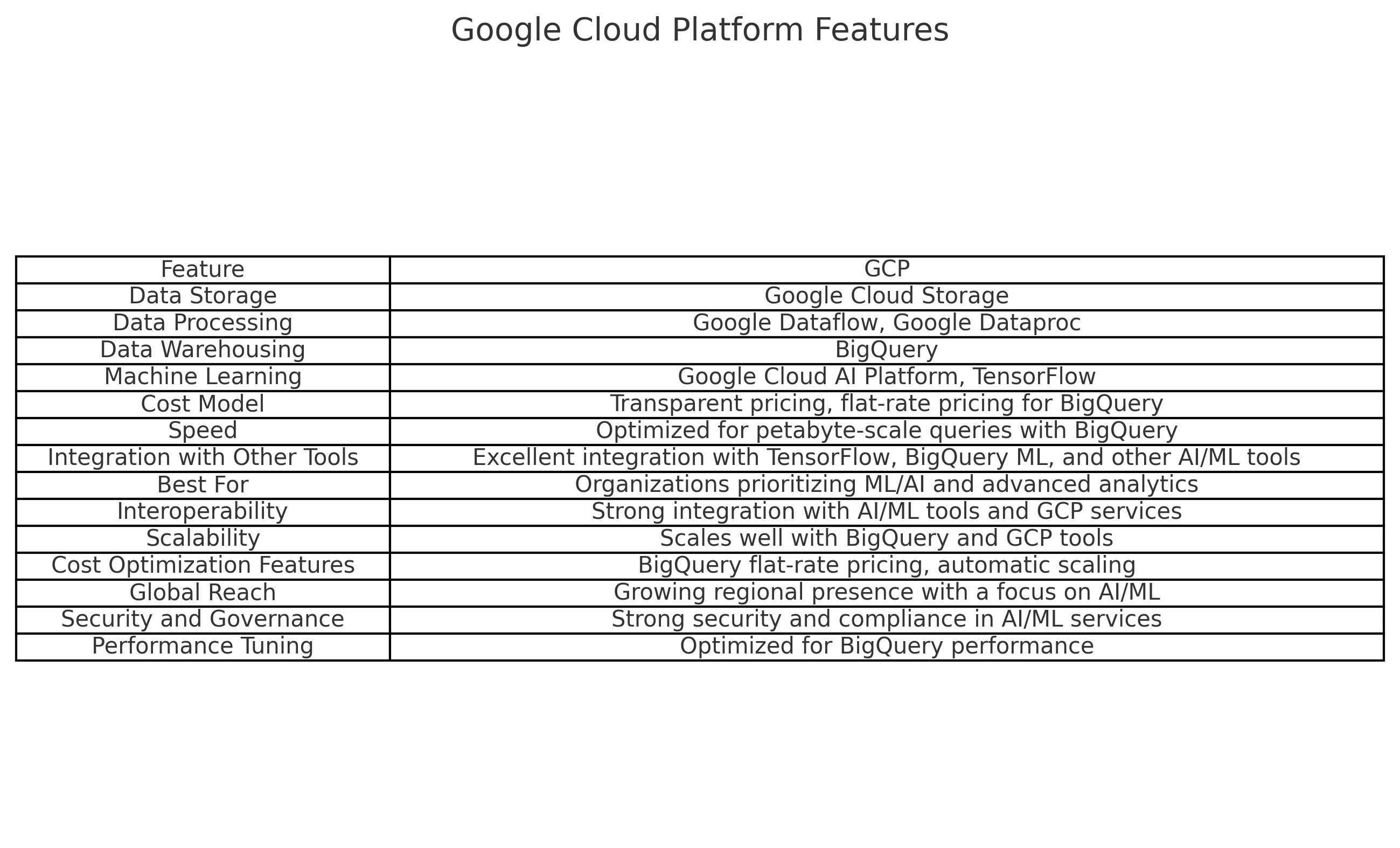

🟢 GCP Data Engineer

What is it?

A GCP Data Engineer manages data pipelines and analytics on Google Cloud Platform, drawing on its machine learning and AI strengths.

Key Tools & Services:

- BigQuery (Serverless data warehouse)

- Cloud Dataflow (Stream & batch data processing)

- Cloud Pub/Sub (Real-time messaging)

- Cloud Storage (Scalable data storage)

- Looker (BI visualization)

Usefulness in Data Engineering:

- Processing real-time IoT data.

- Powering recommendation systems.

- Large-scale data warehousing with BigQuery.

- Ideal for real-time data streaming and AI integrations.

- Simplifies querying with BigQuery's serverless architecture.

Cost:

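- BigQuery: on-demand queries are billed per TiB of data scanned, at $6.25 per TiB in US regions (the rate was $5 before July 2023); the first 1 TiB scanned each month is free.

- Dataflow: costs depend on the worker resources used (vCPU, memory, and storage) and on job duration, plus per-GB charges for data processed by services such as Dataflow Shuffle, starting at roughly $0.01 per GB.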
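Because BigQuery's on-demand model charges for bytes scanned rather than for running servers, query cost is simple arithmetic. This sketch assumes $6.25 per TiB (Google's US on-demand list price since July 2023; it was $5 before that) and the 1 TiB monthly free tier. The rate is a parameter so you can substitute your region's price, and the sketch ignores BigQuery's per-query rounding and minimums.

```python
TIB = 1024 ** 4  # bytes in one tebibyte

def estimate_bigquery_on_demand_cost(bytes_scanned_per_month,
                                     price_per_tib=6.25, free_tib=1.0):
    """Monthly on-demand query cost: billable TiB scanned times the per-TiB price."""
    tib_scanned = bytes_scanned_per_month / TIB
    billable_tib = max(0.0, tib_scanned - free_tib)
    return round(billable_tib * price_per_tib, 2)

# Hypothetical workload: 200 queries a day, each scanning 50 GiB, for 30 days.
monthly_bytes = 200 * 30 * 50 * 1024 ** 3
print(estimate_bigquery_on_demand_cost(monthly_bytes))
```

Since cost scales with bytes scanned, selecting only the columns you need and partitioning or clustering large tables directly lowers the bill.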

Future Outlook:

- GCP excels in real-time data analytics and machine learning applications.

- It is gaining traction among AI/ML-centric organizations, making it a top choice for analytics-heavy use cases.

Strengths:

- Strong AI and machine learning capabilities.

- Cost-effective big data querying with tools like BigQuery.

Best Fit:

- Organizations focused on analytics-heavy and AI/ML-driven applications.

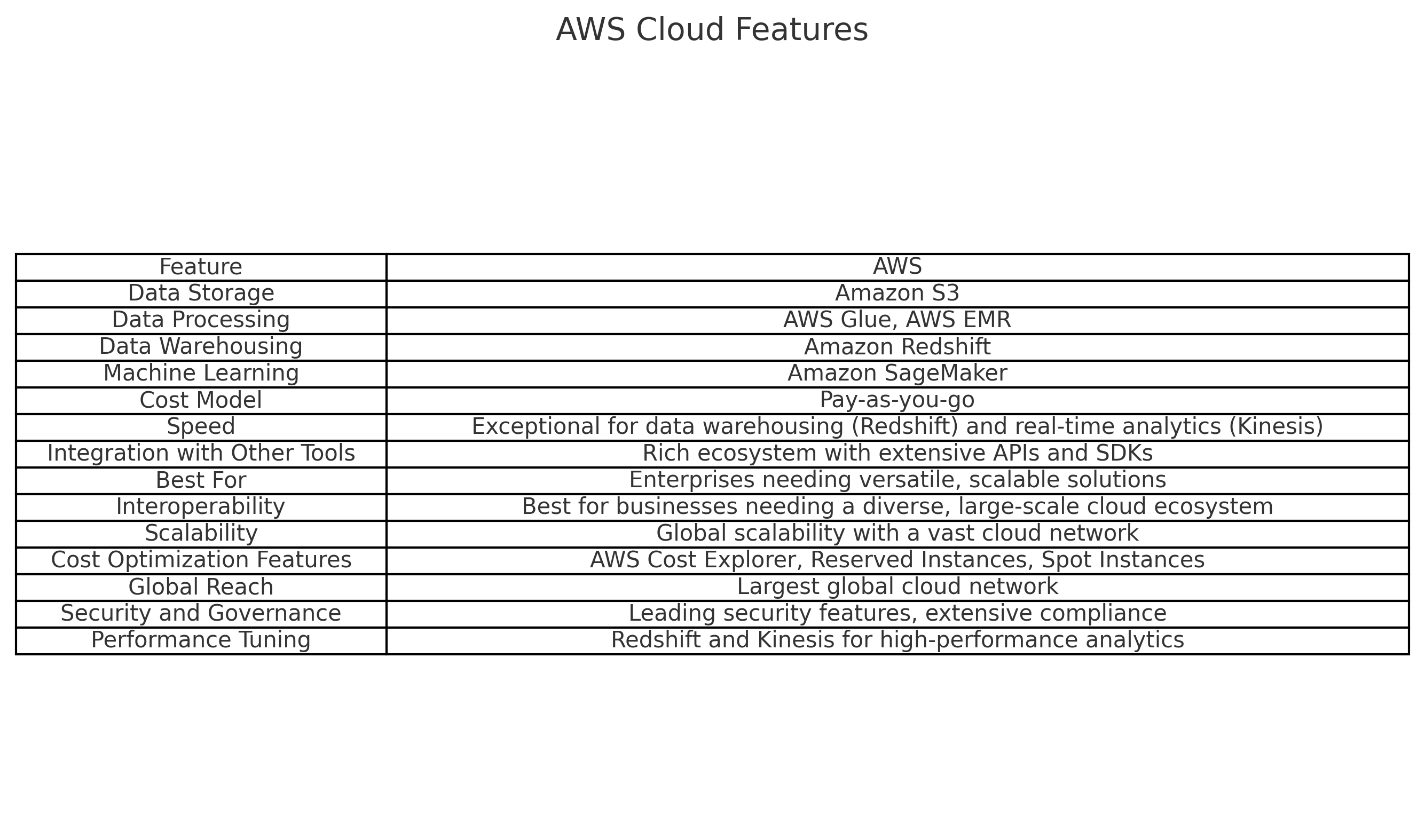

🟠 AWS Data Engineer

What is it?

An AWS Data Engineer uses the Amazon Web Services (AWS) platform to build, transform, and manage data workflows.

Key Tools & Services:

- Amazon Redshift (Data warehousing)

- AWS Glue (ETL service)

- Amazon S3 (Object storage)

- Amazon Kinesis (Real-time data processing)

- Amazon Athena (Serverless query service)

Usefulness in Data Engineering:

- Real-time stock market analysis.

- Building scalable data lakes.

- Cloud-native analytics pipelines.

- Excellent for scalable and cost-efficient storage (S3).

- Provides serverless ETL solutions with AWS Glue.

- Supports streaming and real-time analytics with Kinesis.

Cost:

- S3 Standard storage: $0.023 per GB per month for the first 50 TB (us-east-1), with lower rates at higher volumes.

- Redshift: on-demand nodes start at $0.25 per hour (dc2.large, us-east-1).
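S3 Standard storage is priced in volume tiers, which is why $0.023 is only the starting rate. This sketch assumes the us-east-1 list prices at the time of writing ($0.023 per GB-month for the first 50 TB, $0.022 for the next 450 TB, and $0.021 beyond 500 TB) and treats 1 TB as 1,024 GB for the tier boundaries, an assumption of the sketch. It covers storage only, not requests or data transfer.

```python
# Hypothetical tier table based on S3 Standard us-east-1 list prices at the time
# of writing: (tier size in GB, price per GB-month). None means "all remaining".
S3_STANDARD_TIERS = [
    (50 * 1024, 0.023),    # first 50 TB
    (450 * 1024, 0.022),   # next 450 TB
    (None, 0.021),         # everything over 500 TB
]

def estimate_s3_monthly_storage_cost(stored_gb, tiers=S3_STANDARD_TIERS):
    """Charge each slice of stored data at the rate of the tier it falls into."""
    remaining = stored_gb
    total = 0.0
    for tier_size, price_per_gb in tiers:
        if remaining <= 0:
            break
        in_this_tier = remaining if tier_size is None else min(remaining, tier_size)
        total += in_this_tier * price_per_gb
        remaining -= in_this_tier
    return round(total, 2)

print(estimate_s3_monthly_storage_cost(10_000))    # all in the first tier
print(estimate_s3_monthly_storage_cost(100_000))   # spans the first two tiers
```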

Future Outlook:

- AWS remains a dominant choice for scalable and cost-effective cloud solutions.

- Its first-mover advantage and rich ecosystem keep it relevant for both startups and enterprises.

Strengths:

- The largest cloud provider by market share, with a wide range of tools.

- Flexible and cost-effective storage and compute options.

Best Fit:

- Startups and enterprises looking for a broad ecosystem of tools.

❄️ Snowflake

What is it?

Snowflake is a cloud-native data platform designed for ease of use, scalability, and high-performance analytics. Because it runs on Azure, AWS, and GCP, it fits naturally into a multi-cloud strategy.

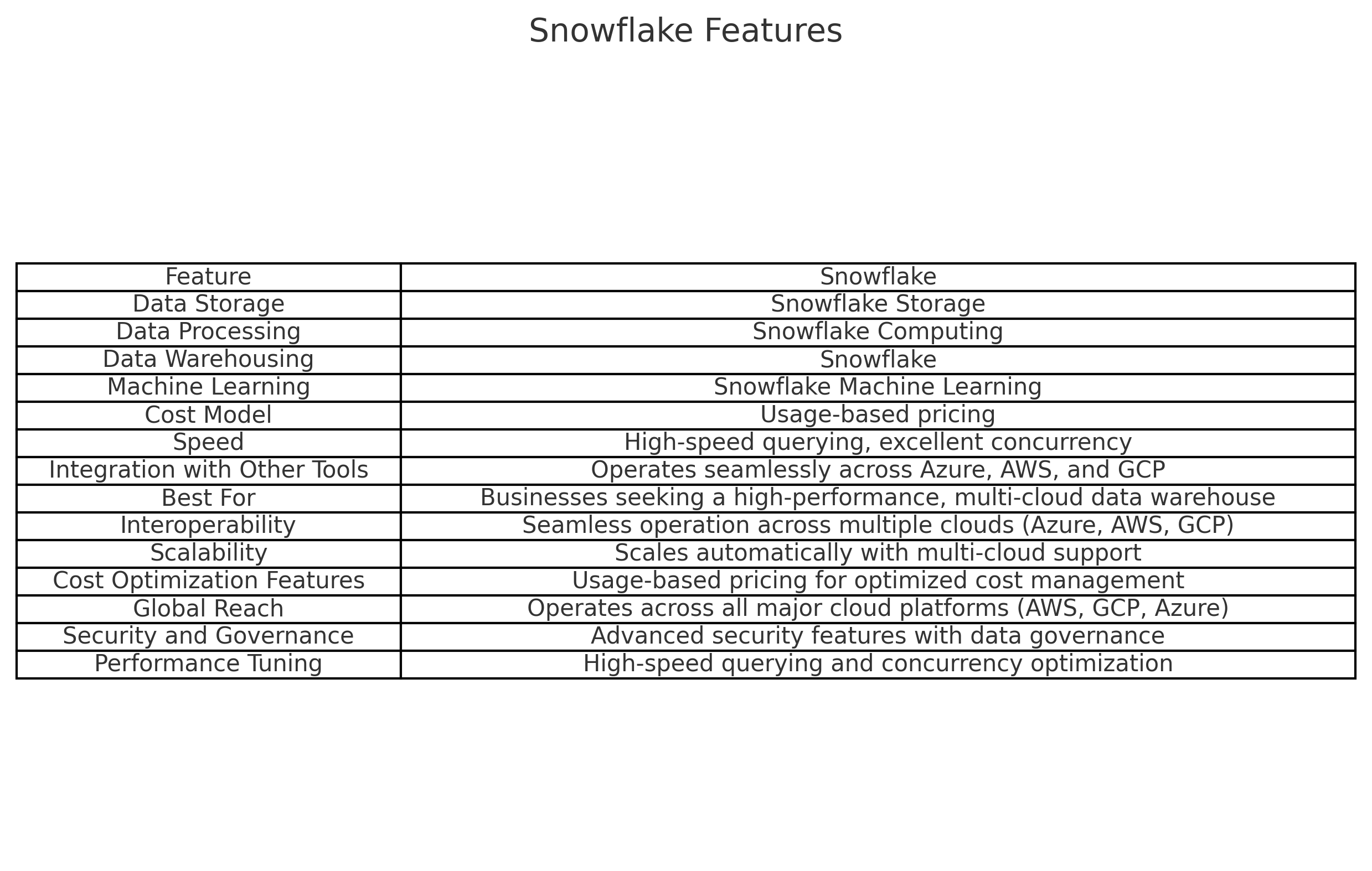

Key Features:

- Fully managed data warehousing.

- Support for structured and semi-structured data (e.g., JSON, Avro, and Parquet).

- Easy-to-use SQL interface.

- Multi-cloud support (Azure, AWS, GCP).

- Automatic scaling and optimization.

- Separation of compute and storage: each scales independently, so you pay only for what you use.

Usefulness in Data Engineering:

- Centralized data lake for cross-cloud analytics.

- Data sharing between organizations.

- Real-time dashboard reporting.

- Query structured and semi-structured data (JSON, Parquet).

- Seamlessly integrates with tools like Tableau and Power BI.

- Efficient for data sharing and collaboration.

Cost:

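- Compute: billed in credits per second of warehouse usage (with a 60-second minimum each time a warehouse starts or resumes), starting at about $2 per credit depending on edition, cloud, and region.

- Storage: about $23 per TB per month (pre-purchased capacity in US regions; on-demand storage costs more).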
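Snowflake compute cost follows from three inputs: the warehouse size (each size up doubles the credits consumed per hour, starting from 1 credit/hour for X-Small), the running time billed per second with a 60-second minimum each time the warehouse starts or resumes, and the price per credit. This sketch models one run of a standard warehouse, sizes up to 4X-Large, and uses $2 per credit as an assumed default; your edition, cloud, and region determine the actual rate.

```python
# Credits consumed per hour by standard Snowflake warehouse sizes.
CREDITS_PER_HOUR = {
    "X-Small": 1, "Small": 2, "Medium": 4, "Large": 8,
    "X-Large": 16, "2X-Large": 32, "3X-Large": 64, "4X-Large": 128,
}

def estimate_snowflake_compute_cost(size, run_seconds, price_per_credit=2.00):
    """Cost of one run that starts from a suspended warehouse.

    Billing is per second, but each start or resume is charged for at least 60 seconds.
    """
    billed_seconds = max(run_seconds, 60)
    credits = CREDITS_PER_HOUR[size] * billed_seconds / 3600
    return round(credits * price_per_credit, 4)

# A 20-second query on a freshly resumed X-Small warehouse is still billed for 60 seconds.
print(estimate_snowflake_compute_cost("X-Small", 20))
# A 30-minute job on a Medium warehouse: 4 credits/hour * 0.5 hour = 2 credits.
print(estimate_snowflake_compute_cost("Medium", 1800))
```

The 60-second minimum is why batching many short queries onto one running warehouse is usually cheaper than resuming it for each query.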

Future Outlook:

- Snowflake is increasingly adopted for multi-cloud analytics; its simplicity and ability to operate across clouds make it a popular choice for modern data warehouses.

Strengths:

- Multi-cloud compatibility with Azure, GCP, and AWS.

- Simple, scalable, and high-performance data warehousing.

Best Fit:

- Teams requiring multi-cloud data warehousing with minimal maintenance.

3. Why Multi-Cloud Expertise is Crucial

- Reduces Risk: Avoids dependence on a single provider, mitigating the risk of outages or pricing changes.

- Optimizes Costs: Use the most cost-effective service from each provider for a specific task, for example storage on AWS (S3) and analytics on GCP (BigQuery). Data transfer (egress) fees for moving data between clouds can offset part of these savings, so include them in any comparison.

- Enhances Performance: Select the best platform for each performance need (e.g., Snowflake for warehousing, Azure for AI).

- Improves Disaster Recovery: Data can be replicated across multiple clouds, helping maintain continuity during a single provider's outage.
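The cost argument only holds once data transfer is counted, because moving data out of one cloud into another usually incurs egress fees. The sketch below compares keeping storage and analytics on one cloud with splitting them across two. Every rate in it is a hypothetical placeholder, not a quoted price; substitute current list prices for your regions.

```python
from dataclasses import dataclass

@dataclass
class MonthlyWorkload:
    stored_gb: float          # data kept in storage all month
    transferred_gb: float     # data moved to the analytics engine each month
    analytics_units: float    # abstract compute units consumed by analytics

def monthly_cost(workload, storage_rate, analytics_rate, egress_rate):
    """Storage + analytics + cross-cloud transfer. Use egress_rate=0 for same-cloud setups."""
    return (workload.stored_gb * storage_rate
            + workload.analytics_units * analytics_rate
            + workload.transferred_gb * egress_rate)

workload = MonthlyWorkload(stored_gb=50_000, transferred_gb=5_000, analytics_units=100)

# Hypothetical rates for illustration only.
single_cloud = monthly_cost(workload, storage_rate=0.023, analytics_rate=12.0, egress_rate=0.0)
split_cloud = monthly_cost(workload, storage_rate=0.020, analytics_rate=8.0, egress_rate=0.09)

print(f"single cloud: ${single_cloud:,.2f}")
print(f"split across clouds: ${split_cloud:,.2f}")
```

With these placeholder numbers, egress adds $450 to the split setup, shrinking a $550 saving to $100; at larger transfer volumes the split could cost more than staying on one cloud.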

4. The Role of a Multi-Cloud Data Engineer

Responsibilities:

- Design pipelines that use services from multiple cloud providers.

- Integrate data between Azure, GCP, AWS, and Snowflake.

- Ensure data security and compliance across platforms.

- Monitor and optimize multi-cloud workloads.

Skills Needed:

- Proficiency in cloud services (Azure, AWS, GCP).

- Expertise in SQL, Python, and distributed data systems.

- Familiarity with APIs, data-movement tools (e.g., Apache NiFi), and workflow orchestrators (e.g., Apache Airflow).
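Orchestrators such as Apache Airflow model a pipeline as a directed acyclic graph (DAG) of tasks, and in a multi-cloud setup those tasks may run on different platforms. The sketch below uses Python's standard-library `graphlib` (Python 3.9+) to compute which tasks can run together at each stage of a hypothetical cross-cloud pipeline. The task names are illustrative, and this is not the Airflow API.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it runs.
# Task names are hypothetical; the prefix notes which platform would run it.
pipeline = {
    "aws_s3_ingest_raw_logs": set(),
    "azure_adf_ingest_crm": set(),
    "gcp_dataflow_clean_logs": {"aws_s3_ingest_raw_logs"},
    "snowflake_load_logs": {"gcp_dataflow_clean_logs"},
    "snowflake_load_crm": {"azure_adf_ingest_crm"},
    "snowflake_build_report": {"snowflake_load_logs", "snowflake_load_crm"},
}

sorter = TopologicalSorter(pipeline)
sorter.prepare()
stage = 1
while sorter.is_active():
    ready = sorted(sorter.get_ready())   # tasks whose dependencies are all done
    print(f"stage {stage}: {', '.join(ready)}")
    sorter.done(*ready)                  # a real orchestrator would wait for them to finish
    stage += 1
```

Tasks in the same stage have no dependency on each other, so an orchestrator could run them in parallel on their respective clouds.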

5. Use Cases for Multi-Cloud Data Engineering

Case Study: Retail Analytics

- Challenge: Analyze customer behavior in real time while keeping storage costs low.

- Solution:

  - AWS S3: Store raw transaction logs.

  - Azure Synapse Analytics: Perform predictive analytics.

  - Snowflake: Provide a centralized warehouse for cross-cloud reporting.

Case Study: Disaster Recovery

- Challenge: Ensure high availability of critical applications.

- Solution:

  - GCP Dataflow: Real-time data processing.

  - AWS S3: Backup data storage.

  - Azure Blob Storage: Redundant storage for failover.
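The disaster-recovery design above relies on writing each dataset to more than one provider and reading from a replica when the primary is unavailable. The sketch below shows that pattern with in-memory stand-ins: `InMemoryStore` is a hypothetical class, and a real implementation would wrap the S3 and Azure Blob Storage SDKs behind the same `put`/`get` interface.

```python
import hashlib

class StoreUnavailable(Exception):
    """Raised when a storage provider cannot serve a request."""

class InMemoryStore:
    """Hypothetical stand-in for a cloud object store (e.g., S3 or Azure Blob Storage)."""
    def __init__(self, name):
        self.name = name
        self.objects = {}
        self.available = True

    def put(self, key, data):
        if not self.available:
            raise StoreUnavailable(self.name)
        self.objects[key] = data

    def get(self, key):
        if not self.available:
            raise StoreUnavailable(self.name)
        return self.objects[key]

def replicate(stores, key, data):
    """Write the same object to every store and return its SHA-256 checksum."""
    for store in stores:
        store.put(key, data)
    return hashlib.sha256(data).hexdigest()

def read_with_failover(stores, key, expected_checksum):
    """Try stores in priority order; skip any that are down, missing the key, or corrupted."""
    for store in stores:
        try:
            data = store.get(key)
        except (StoreUnavailable, KeyError):
            continue
        if hashlib.sha256(data).hexdigest() == expected_checksum:
            return store.name, data
    raise RuntimeError(f"no healthy replica for {key!r}")

primary, backup = InMemoryStore("aws-s3"), InMemoryStore("azure-blob")
checksum = replicate([primary, backup], "orders/2024-01-01.csv", b"id,amount\n1,9.99\n")

primary.available = False  # simulate an outage at the primary provider
print(read_with_failover([primary, backup], "orders/2024-01-01.csv", checksum))
```

Verifying the checksum on read means a replica that is reachable but holds stale or corrupted data is skipped rather than served.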

6. Is Multi-Cloud the Future of Data Engineering?

Trends Indicating Growth:

- Hybrid Cloud Solutions: Organizations are combining on-premises and cloud systems with multi-cloud architectures.

- Vendor Competition: Providers are adding multi-cloud compatibility (e.g., Snowflake’s cross-cloud support).

- Global Reach: Companies require multi-region solutions to comply with data regulations.
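Meeting data-residency rules often comes down to routing each record to storage in an approved region. The sketch below uses a hypothetical policy table that maps a customer's country to a provider and region; the mapping is invented for illustration, and the region names are only examples.

```python
from collections import defaultdict

# Hypothetical residency policy: country code -> (provider, region) where that
# country's customer data may be stored. The mapping is invented for illustration.
RESIDENCY_POLICY = {
    "DE": ("azure", "germanywestcentral"),
    "FR": ("gcp", "europe-west9"),
    "US": ("aws", "us-east-1"),
}

def route_by_residency(records, policy=RESIDENCY_POLICY):
    """Group record IDs by the (provider, region) their country's policy requires."""
    batches = defaultdict(list)
    for record in records:
        location = policy.get(record["country"])
        if location is None:
            # Fail closed: an unmapped country must not fall back to an arbitrary region.
            raise ValueError(f"no residency rule for country {record['country']!r}")
        batches[location].append(record["id"])
    return dict(batches)

customers = [
    {"id": 1, "country": "DE"},
    {"id": 2, "country": "US"},
    {"id": 3, "country": "FR"},
    {"id": 4, "country": "DE"},
]
for (provider, region), ids in route_by_residency(customers).items():
    print(f"{provider}/{region}: {ids}")
```

Raising an error for unmapped countries, instead of using a default region, keeps a missing rule from silently sending data to the wrong jurisdiction.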

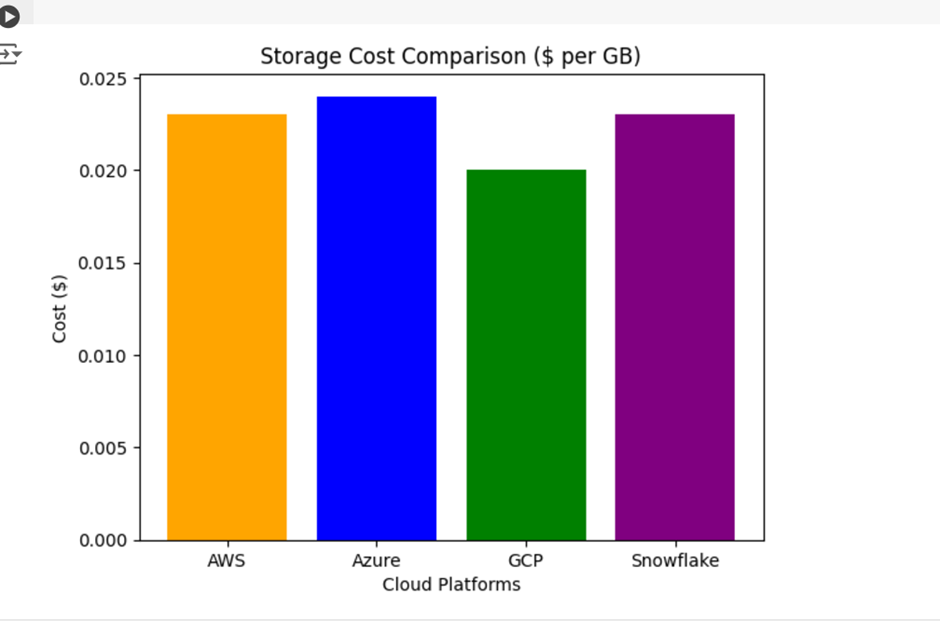

7. Visualizing Multi-Cloud Workflows

Use tools like Matplotlib or Seaborn to create visualizations of:

- Cost comparison across clouds.

- Performance benchmarks.

- Data flow between systems.

Example code snippet:

```python
import matplotlib.pyplot as plt

platforms = ['AWS', 'Azure', 'GCP', 'Snowflake']

# Example data: illustrative storage costs in $ per GB per month
costs = [0.023, 0.024, 0.02, 0.023]

# One bar per platform, colored to match the diagram's platform colors
plt.bar(platforms, costs, color=['orange', 'blue', 'green', 'purple'])
plt.title('Storage Cost Comparison ($ per GB per month)')
plt.ylabel('Cost ($ per GB per month)')
plt.xlabel('Cloud Platforms')
plt.tight_layout()
plt.show()
```

Conclusion:

Multi-cloud data engineering is changing the way organizations manage and analyze data. By combining the strengths of Azure, GCP, AWS, and Snowflake, businesses can gain flexibility, cost efficiency, and resilience. For data engineers, multi-cloud expertise is fast becoming essential to staying competitive in the rapidly evolving data industry.